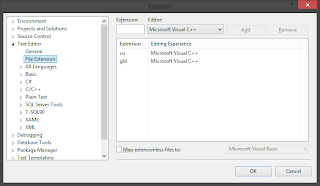

This time I plan to concentrate just on optimising my vector addition a little bit. In my previous example I had a statically defined number of blocks and threads used by my kernel 'AddVectorsKernel'. This time I'd like to make it a bit more adjustable. I also got a slightly better syntax highlighter because the old one was pretty much unreadable.

To begin with, this is my vanilla code without the CPU vector addition, just plain CUDA. I will be introducing and explaining some changes to it as I go along.

#include <cuda.h>

#include <cuda_runtime.h>

#include <device_launch_parameters.h>

#include <stdio.h>

#include <time.h>

#pragma comment(lib, "cudart")

typedef struct

{

float *content;

const unsigned int size;

} pjVector_t;

__global__ void AddVectorsKernel(float *firstVector, float *secondVector, float *resultVector)

{

unsigned int index = threadIdx.x + blockIdx.x * blockDim.x;

resultVector[index] = firstVector[index] + secondVector[index];

}

int main(void)

{

const unsigned int vectorLength = 1000000;

const unsigned int blocks = 1000;

const unsigned int threads = 1000;

const unsigned int vectorSize = sizeof(float) * vectorLength;

pjVector_t firstVector = { (float *)calloc(vectorLength, sizeof(float)), vectorLength };

pjVector_t secondVector = { (float *)calloc(vectorLength, sizeof(float)), vectorLength };

pjVector_t resultVector = { (float *)calloc(vectorLength, sizeof(float)), vectorLength };

float *d_firstVector;

float *d_secondVector;

float *d_resultVector;

cudaMalloc((void **)&d_firstVector, vectorSize);

cudaMalloc((void **)&d_secondVector, vectorSize);

cudaMalloc((void **)&d_resultVector, vectorSize);

for (unsigned int i = 0; i < vectorLength; i++)

{

firstVector.content[i] = 1.0f;

secondVector.content[i] = 2.0f;

}

cudaMemcpy(d_firstVector, firstVector.content, vectorSize, cudaMemcpyHostToDevice);

cudaMemcpy(d_secondVector, secondVector.content, vectorSize, cudaMemcpyHostToDevice);

AddVectorsKernel<<<blocks, threads>>>(d_firstVector, d_secondVector, d_resultVector);

cudaMemcpy(resultVector.content, d_resultVector, vectorSize, cudaMemcpyDeviceToHost);

free(firstVector.content);

free(secondVector.content);

free(resultVector.content);

cudaFree(d_firstVector);

cudaFree(d_secondVector);

cudaFree(d_resultVector);

cudaDeviceReset();

return 0;

}

First of all, I'm going to modify the number of threads per block to be as high as possible on my machine to decrease the number of used blocks. To do this, I'm calling cudaGetDeviceProperties function and reading the maxThreadsPerBlock field from the populated structure cudaDeviceProp. I also got rid of const keyword in for blocks and threads since they are populated dynamically.

#include <cuda.h>

#include <cuda_runtime.h>

#include <device_launch_parameters.h>

#include <stdio.h>

#include <math.h>

#define VECTOR_LENGTH 1000000

#pragma comment(lib, "cudart")

typedef struct

{

float *content;

const unsigned int size;

} pjVector_t;

__global__ void AddVectorsKernel(float *firstVector, float *secondVector, float *resultVector)

{

unsigned int index = threadIdx.x + blockIdx.x * blockDim.x;

if(index < VECTOR_LENGTH)

{

resultVector[index] = firstVector[index] + secondVector[index];

}

}

int main(void)

{

const unsigned int vectorSize = sizeof(float) * VECTOR_LENGTH;

int threads = 0;

unsigned int blocks = 0;

cudaDeviceProp deviceProperties;

cudaGetDeviceProperties(&deviceProperties, 0);

threads = deviceProperties.maxThreadsPerBlock;

blocks = (unsigned int)ceil(VECTOR_LENGTH / (double)threads);

pjVector_t firstVector = { (float *)calloc(VECTOR_LENGTH, sizeof(float)), VECTOR_LENGTH };

pjVector_t secondVector = { (float *)calloc(VECTOR_LENGTH, sizeof(float)), VECTOR_LENGTH };

pjVector_t resultVector = { (float *)calloc(VECTOR_LENGTH, sizeof(float)), VECTOR_LENGTH };

float *d_firstVector;

float *d_secondVector;

float *d_resultVector;

cudaMalloc((void **)&d_firstVector, vectorSize);

cudaMalloc((void **)&d_secondVector, vectorSize);

cudaMalloc((void **)&d_resultVector, vectorSize);

for (unsigned int i = 0; i < VECTOR_LENGTH; i++)

{

firstVector.content[i] = 1.0f;

secondVector.content[i] = 2.0f;

}

cudaMemcpy(d_firstVector, firstVector.content, vectorSize, cudaMemcpyHostToDevice);

cudaMemcpy(d_secondVector, secondVector.content, vectorSize, cudaMemcpyHostToDevice);

AddVectorsKernel<<<blocks, threads>>>(d_firstVector, d_secondVector, d_resultVector);

cudaMemcpy(resultVector.content, d_resultVector, vectorSize, cudaMemcpyDeviceToHost);

free(firstVector.content);

free(secondVector.content);

free(resultVector.content);

cudaFree(d_firstVector);

cudaFree(d_secondVector);

cudaFree(d_resultVector);

cudaDeviceReset();

return 0;

}

After running this code, performance dropped to 833[µs] (~5%). 'Did I do something wrong?', I was asking myself. And actually I did - that 'if' statement in the kernel code was that 'something wrong'. Or maybe not entirely wrong, but not very well placed. I thought initially: how come now, since I'm utilising all possible threads in every block (well, maybe except the last one - it will have 448 unused threads, 977 * 1024 - 1000000 = 448), my kernel uses more time to do its job than previously, where the number of unused threads was a lot (~53 times!) higher? The answer was that 'if' - I quickly realised that every thread in every block had to evaluate it 1 million (+448) times. Why do that since I can precisely adjust the length of my vector (+ that 448 elements) so that 'if' is not needed anymore and I will never go outside my vector in the kernel?

I'm obviously just playing with the toolkit right now so it's not a problem to add something here and tweak something there to achieve my goal, but even in the real life scenario (which I highly doubt this code will ever be used for!) you could do the same thing - add some extra elements to you vector and fill them with zeros not to check if you're within your vector in the kernel code.

Let's do some (integer!) math:

-

Unused threads in my previous approach: (1024 - 1000) * 1000 = 24000 (!)

Redundant threads: 0 (all working threads are important)

index checked: 0 times

-

Unused threads in my current approach: ((1000000 / 1024) * 1024) - 1000000 + 1024 = -576 + 1024 = 448 (they are doing nothing)

Redundant threads: 0 (all working threads are important)

index checked: 1000448 times (!)

-

Unused threads in my new approach: 0 (all are used)

Redundant threads: 1000448 - 1000000 = 448 (they are just adding zeros)

index checked: 0 times

Now let's see some code (I'm not filling these last 448 vector items with zeros, but you get the idea):

#include <cuda.h>

#include <cuda_runtime.h>

#include <device_launch_parameters.h>

#include <stdio.h>

#include <math.h>

#define VECTOR_LENGTH 1000448

#pragma comment(lib, "cudart")

typedef struct

{

float *content;

const unsigned int size;

} pjVector_t;

__global__ void AddVectorsKernel(float *firstVector, float *secondVector, float *resultVector)

{

unsigned int index = threadIdx.x + blockIdx.x * blockDim.x;

resultVector[index] = firstVector[index] + secondVector[index];

}

int main(void)

{

const unsigned int vectorSize = sizeof(float) * VECTOR_LENGTH;

int threads = 0;

unsigned int blocks = 0;

cudaDeviceProp deviceProperties;

cudaGetDeviceProperties(&deviceProperties, 0);

threads = deviceProperties.maxThreadsPerBlock;

blocks = (unsigned int)ceil(VECTOR_LENGTH / (double)threads);

pjVector_t firstVector = { (float *)calloc(VECTOR_LENGTH, sizeof(float)), VECTOR_LENGTH };

pjVector_t secondVector = { (float *)calloc(VECTOR_LENGTH, sizeof(float)), VECTOR_LENGTH };

pjVector_t resultVector = { (float *)calloc(VECTOR_LENGTH, sizeof(float)), VECTOR_LENGTH };

float *d_firstVector;

float *d_secondVector;

float *d_resultVector;

cudaMalloc((void **)&d_firstVector, vectorSize);

cudaMalloc((void **)&d_secondVector, vectorSize);

cudaMalloc((void **)&d_resultVector, vectorSize);

for (unsigned int i = 0; i < VECTOR_LENGTH; i++)

{

firstVector.content[i] = 1.0f;

secondVector.content[i] = 2.0f;

}

cudaMemcpy(d_firstVector, firstVector.content, vectorSize, cudaMemcpyHostToDevice);

cudaMemcpy(d_secondVector, secondVector.content, vectorSize, cudaMemcpyHostToDevice);

AddVectorsKernel<<<blocks, threads>>>(d_firstVector, d_secondVector, d_resultVector);

cudaMemcpy(resultVector.content, d_resultVector, vectorSize, cudaMemcpyDeviceToHost);

free(firstVector.content);

free(secondVector.content);

free(resultVector.content);

cudaFree(d_firstVector);

cudaFree(d_secondVector);

cudaFree(d_resultVector);

cudaDeviceReset();

return 0;

}

And there it is: 756.479[µs] - time needed to process more data was actually ~5% shorter!

That's it for now, thanks for reading, I hope it was useful or at least interesting.